A Curious Question, A Catastrophic Result

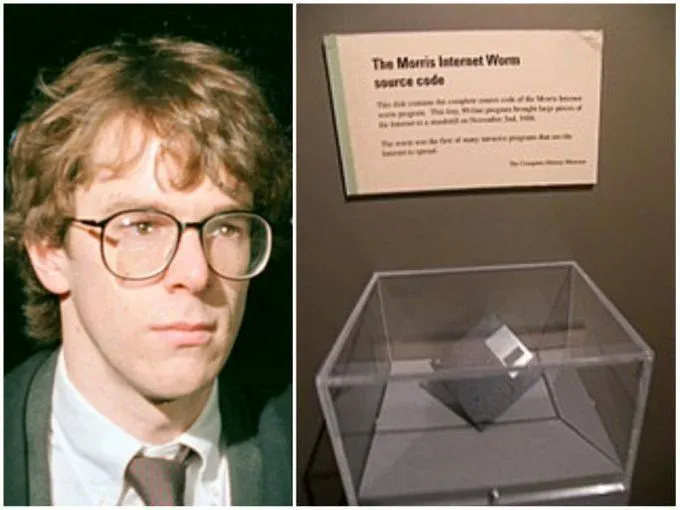

On November 2, 1988, at 8:30 PM, a 23-year-old Cornell graduate student named Robert Tappan Morris had a simple question: How big is the internet?

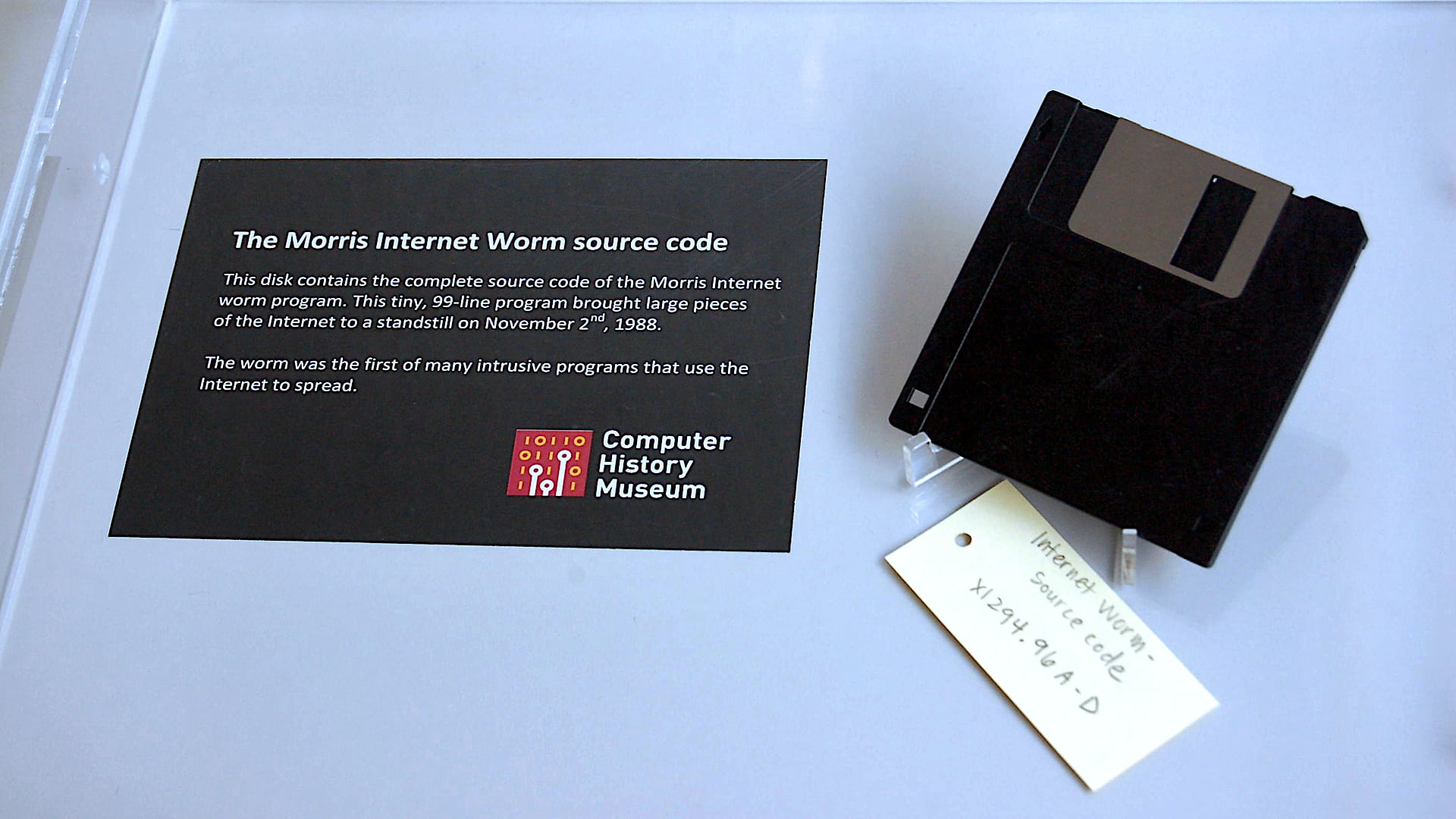

To find out, he wrote 99 lines of code—a self-replicating program designed to quietly count computers on the network. He released it from an MIT computer (to hide his tracks) and went to dinner.

By the time he got back, he’d accidentally crippled roughly 10% of the entire internet.

What Happened

Within 24 hours, an estimated 6,000 of the roughly 60,000 computers connected to the internet were grinding to a halt. Harvard, Stanford, NASA, and military research facilities were all affected, and email was delayed for days.

The problem? A flaw in Morris’s design. The worm was supposed to check whether a computer was already infected before copying itself. But Morris worried that administrators might fake an “already infected” response to protect their machines, so he programmed the worm to copy itself anyway about one time in seven (roughly 14%), no matter what the answer was.

The result: computers got infected hundreds of times over, overwhelmed by endless copies of the same program.

“We are currently under attack,” wrote a panicked UC Berkeley student in an email that night.

The Aftermath

The Morris Worm caused an estimated $100,000 to $10 million in damages. Morris became the first person convicted under the Computer Fraud and Abuse Act, receiving three years’ probation, 400 hours of community service, and a $10,000 fine.

But here’s the thing—Morris didn’t have malicious intent. He genuinely just wanted to measure the network’s size. His creation accidentally became the first major wake-up call for internet security.

The incident led directly to the creation of CERT (Computer Emergency Response Team) and sparked the development of the modern cybersecurity industry. The New York Times even used the phrase “the Internet” in print for the first time while reporting on it.

Why November 30th?

In direct response to the Morris Worm, the Association for Computing Machinery established Computer Security Day just weeks later. It chose November 30th specifically, as the holiday shopping season gets under way, because cybercriminals love exploiting busy, distracted people.

That advice is even more relevant 37 years later.

1988 vs. 2025: A Quick Comparison

Consider how things have changed:

Then: 60,000 computers connected to the internet.

Now: Over 15 billion devices.

Then: Total damage from the Morris Worm: $100K-$10M.

Now: Average cost of a single data breach: $4.44 million (IBM’s 2025 global average).

Then: Attack motivation was curiosity.

Now: 97% of attacks are financially motivated.

Yet some things haven’t changed. The Morris Worm exploited weak passwords and unpatched systems—the same vulnerabilities that cause most breaches today.

What This Means for You

Computer Security Day isn’t just history—it’s a reminder that the basics still work:

• Multi-factor authentication blocks more than 99.9% of account-compromise attacks, according to Microsoft

• Regular, tested backups can save your business from ransomware

• Employee training dramatically reduces successful phishing attacks

And yes—the holiday season really is prime time for attacks. Stay vigilant through January.

One More Thing

Robert Morris never went to prison. After completing his sentence, he co-founded Y Combinator (the startup accelerator behind Airbnb, Dropbox, and Reddit) and became a tenured professor at MIT—the same school where he launched his infamous worm.

In 2015, he was elected a Fellow of the Association for Computing Machinery—the organization that created Computer Security Day in response to his attack.

The lesson? The person who exposed the internet’s greatest vulnerabilities is now part of the establishment working to secure it. Threats evolve. Defenses must evolve too.

The question is: will yours?

Take Our 2-Minute Security Assessment →

centrexIT has been protecting businesses since 2002. Questions about your security? Let’s talk.